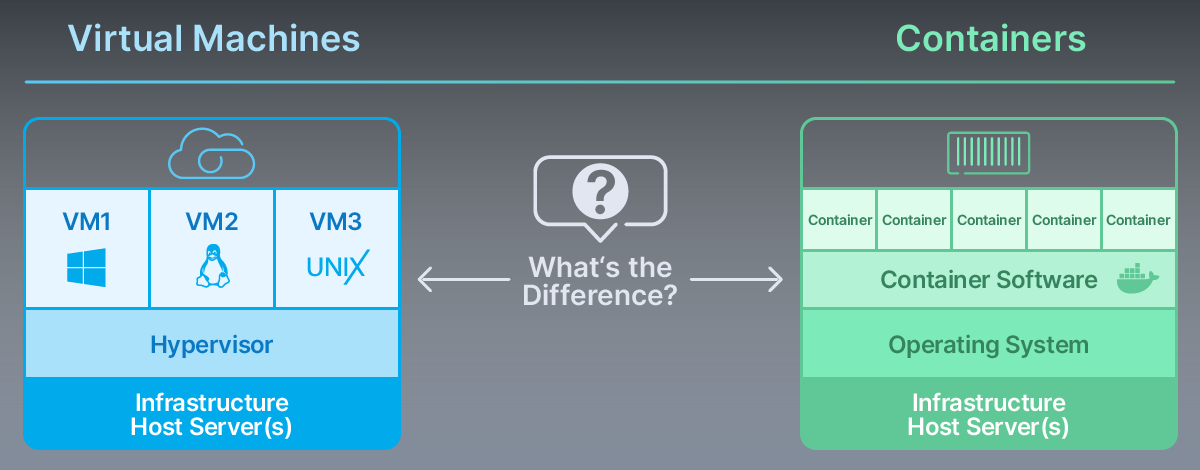

Both virtual machines and containers are routinely used to run cloud workloads. Our previous article described how virtual machines work; today we’re going to focus on their lightweight relative: containers. But before we start, let’s quickly sum up the previous article.

What are Virtual Machines, Again?

Virtual machines are fully independent virtual computers that let you perform all necessary operations in the same way as on a physical computer. Each virtual machine has its own allocated hardware capacity: a certain number of CPUs, an amount of RAM, storage and other resources.

We explained that modern virtualization is performed by software called a hypervisor. The hypervisor distributes hardware resources among the guest machines: sometimes one virtual machine uses more resources, sometimes another one demands more computing power. Because it’s easy to create many virtual machines on one server and let them share its resources, virtualization has become a way for companies to reduce expenses. Instead of purchasing a whole physical server, it’s possible to order just a small slice of its capacity in the form of a VPS (virtual private server).

Many different terms describe the same concept: virtual machines, virtual computers, cloud instances, VPS. Some providers use their own product names, such as Amazon’s EC2 or DigitalOcean’s Droplets.

If you haven’t done so already, we recommend that you read our article on virtualization first. It will also help you understand containers better.

What is a Linux Container?

Containers are a form of virtualization that is more lightweight and minimalistic than virtual machines. Containers are native for Linux – they are built directly into its kernel, and they use the same resources as the host OS. To some extent running containers is similar to running any other application on your Linux

Starting a container takes a single command: the container engine downloads an image (for example, a minimal Linux distribution) and starts a new instance from it. The Linux kernel then plays a role similar to the hypervisor for virtual machines: it schedules the container’s processes alongside all others and, through control groups (cgroups), can cap how much CPU and memory each container is allowed to use.
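The kernel feature that enforces such caps is control groups (cgroups). On a host using cgroup v2, a container’s limits appear as small text files such as cpu.max and memory.max in its cgroup directory (inside the container typically under /sys/fs/cgroup). The Python sketch below only interprets the contents of those files; the sample values are assumptions, chosen to match what a container started with `docker run --cpus=1.5 --memory=512m` would show.

```python
from typing import Optional


def cpu_limit(cpu_max: str) -> Optional[float]:
    """Translate the contents of a cgroup v2 cpu.max file into a number of CPUs.

    The file holds "<quota> <period>" in microseconds. A quota of "max" means
    the group is not capped and competes for CPU like any other process.
    """
    quota, period = cpu_max.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def memory_limit(memory_max: str) -> Optional[int]:
    """Translate the contents of a cgroup v2 memory.max file into bytes (None = unlimited)."""
    value = memory_max.strip()
    return None if value == "max" else int(value)


# Sample file contents (assumed): 1.5 CPUs and 512 MiB of RAM.
print(cpu_limit("150000 100000"))
print(cpu_limit("max 100000"))
print(memory_limit("536870912\n"))
```

A container started without any limits shows "max" in both files, which is why, by default, it can use as much of the host’s capacity as the kernel’s scheduler gives it.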

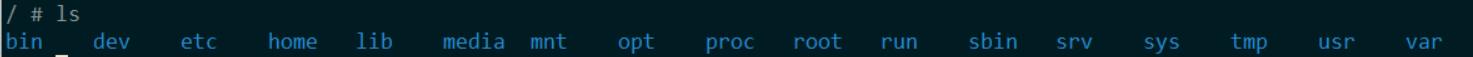

At first glance, containers look like fully fledged virtual instances. For example, a container started from the popular Alpine image contains the familiar /home, /root, /proc and other directories.

However, a container is not a fully independent operating system. It is just an isolated process running on the Linux kernel of the host system, and it contains only the bare essentials needed to run your chosen application. For example, the Alpine image mentioned above is only about 5 MB by default, while an image of a whole operating system usually takes several gigabytes (3 GB in the case of a standard Ubuntu 20.04 image). Containers are portable and run the same way no matter which computer they are started on.

Containers are an important part of the modern DevOps approach, allowing developers to release products faster and update them continuously. You will learn more about DevOps in the next article of the Cloud Explained series.

Containers vs Virtual Machines

In the last several years, many companies have moved their applications from virtual machines to containers. Yet despite all the hype, virtual machines are not likely to be fully replaced by containers. They are complementary technologies, not rivals: containers and virtual machines are often used in the same cloud infrastructure.

| | VMs | Containers |
|---|---|---|
| Image | All included | Bare essentials |
| Kernel | Independent of the host OS | Shared with the host OS |
| Provisioning | In minutes | In seconds |
| Virtualization | Hardware-based | OS-based |

Let’s look closer at some of the advantages and disadvantages of both containers and virtual machines:

Virtual Machines: The Whole OS

Container images contain just the bare essentials, while a VM runs a complete operating system, just like a standard physical computer. VMs are better suited to providing a whole virtual environment, as our VPS instances do.

Containers: Less Storage Required

Some estimates say that you can run as many as four to six times more containers than virtual machines based on Xen or KVM hypervisors in the same amount of space.

Containers: Instantly Ready

Containers can be started with just one or two commands. Since they use the kernel of the host OS, there is no operating system to boot, so a container is ready almost immediately.

Containers: Uniform Environment

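Because a container packages an application together with everything it depends on, it behaves the same way on every computer that runs a compatible container engine.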

Decision Factors: When Is a Container an Advantage or a Disadvantage?

Testing Apps

Containers allow developers to quickly test new applications on many different Linux distributions. Switching to another distribution image is much faster than installing a new operating system in a virtual machine.

Microservice Architecture

Containers are well suited to serving as tiny single-task instances. Even so, microservice architectures often rely on a mix of virtual machines and containers.

Databases

Using containers to deploy traditional databases like MySQL can be complicated:

Expert’s opinion: Tino Lehnig, Cloud Architect

“Containers work best for applications that are stateless, meaning they don’t save any persistent data inside. Such applications can be scaled easily just by deploying more of the same code. Database apps are the polar opposite – database entries need to be permanently available and scaling requires careful configuration. There is dedicated database orchestration software that is already designed to take this factor into consideration. Adding container orchestration to this mix is usually not worth the effort.”
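The difference between stateless and stateful applications can be illustrated with a toy Python model. The order service and the round-robin `serve()` helper are hypothetical stand-ins for a real application and load balancer; the model only shows what happens when the same code is copied into several replicas.

```python
from itertools import cycle
from typing import List, Sequence


class StatelessService:
    def handle(self, order_id: int) -> str:
        # The reply depends only on the request itself.
        return f"order {order_id} accepted"


class StatefulService:
    def __init__(self) -> None:
        self.orders: List[int] = []  # data kept inside this one instance

    def handle(self, order_id: int) -> str:
        self.orders.append(order_id)
        return f"stored orders: {len(self.orders)}"


def serve(replicas: Sequence, requests: Sequence[int]) -> List[str]:
    """Distribute requests over replicas round-robin, like a very simple load balancer."""
    targets = cycle(replicas)
    return [next(targets).handle(r) for r in requests]


requests = [101, 102, 103]
# Stateless: one instance or three copies give identical answers.
print(serve([StatelessService()], requests) == serve([StatelessService() for _ in range(3)], requests))
# Stateful: each copy sees only part of the data, so the answers diverge.
print(serve([StatefulService()], requests))
print(serve([StatefulService() for _ in range(3)], requests))
```

With three stateful replicas, each one reports a single stored order, which is exactly the kind of inconsistency that dedicated database clustering and replication are designed to prevent.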

Docker

Docker is currently the most popular container engine on the market. It has gradually replaced LXC and other older engines. While there are other viable alternatives today, it’s still hard to imagine talking about containers without mentioning Docker. Before we start, let’s clarify three key terms:

Important Terms

Parent image – the read-only base image you download first. It can be almost anything: an Ubuntu image, a LAMP stack image, a WordPress image and so on.

Image – once you start building on the parent image (adding your application, files and settings), the result becomes your own image. This is the one you share with your colleagues.

Container – a running instance of an image: the image’s read-only layers plus a thin writable layer where all changes are stored. A container cannot run without an image.
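To make the relationship between the three terms concrete, here is a deliberately simplified Python model. It is not how Docker actually stores data: real images consist of content-addressed filesystem layers, and deleted files are recorded as special "whiteout" entries, which this model ignores. The class names and sample file paths are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Layer = Dict[str, str]  # path -> file contents (simplified)


@dataclass(frozen=True)
class Image:
    name: str
    layers: Tuple[Layer, ...]  # treated as read-only, bottom layer first

    def derive(self, name: str, changes: Layer) -> "Image":
        # Building on a parent image adds a new layer; the parent stays untouched.
        return Image(name, self.layers + (dict(changes),))


@dataclass
class Container:
    image: Image
    writable: Layer = field(default_factory=dict)  # the only layer a container changes

    def read(self, path: str) -> Optional[str]:
        # Look in the writable layer first, then in image layers from top to bottom.
        for layer in (self.writable, *reversed(self.image.layers)):
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, data: str) -> None:
        # Copy-on-write: changes land in the container's layer, never in the image.
        self.writable[path] = data


parent = Image("ubuntu", ({"/etc/os-release": "Ubuntu"},))
mine = parent.derive("my-app", {"/app/main.py": "print('hi')"})
box = Container(mine)
box.write("/etc/os-release", "patched")
print(box.read("/etc/os-release"))             # changed inside this container only
print(Container(mine).read("/etc/os-release"))  # a fresh container still sees the image
```

Because image layers are never modified, many containers can safely be started from the same image at once, each with its own writable layer.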

How do Developers Use Docker?

To give you a better idea, let’s look at a typical Docker setup. In this example, we assume that no managed container service is used: as we always encourage our customers to do, we rent a VPS, VDS or Dedicated Server and build the containerization in-house.

1 Installing Docker

Although containers rely on technology that is already built into every Linux kernel, users have to install a container engine first. The installation is easily done from the command line; the exact steps depend on the particular distribution.
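After installation, it is worth confirming that both the `docker` command-line client and the Docker Engine daemon work. A minimal Python sketch, assuming the client is called `docker` and is on your PATH: it asks the daemon for its version with `docker version --format` and returns None if anything is missing.

```python
import shutil
import subprocess
from typing import Optional


def docker_engine_version() -> Optional[str]:
    """Return the Docker Engine (server) version, or None if Docker is not usable."""
    if shutil.which("docker") is None:
        return None  # the docker CLI is not on PATH: Docker is not installed
    try:
        result = subprocess.run(
            ["docker", "version", "--format", "{{.Server.Version}}"],
            capture_output=True, text=True, timeout=10,
        )
    except subprocess.TimeoutExpired:
        return None  # the daemon did not answer in time
    if result.returncode != 0:
        return None  # CLI installed, but the daemon is not running or not accessible
    return result.stdout.strip() or None


version = docker_engine_version()
print(f"Docker Engine {version}" if version else "Docker is not installed or not running")
```

If the daemon runs as root, non-root users typically need `sudo` or membership in the `docker` group for this check to succeed.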

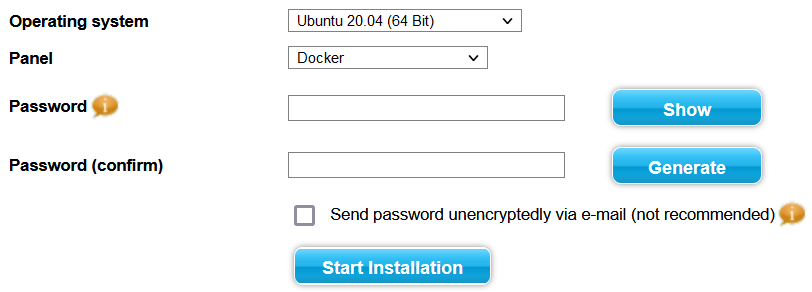

Contabo customers can save time by having Docker installed while installing or reinstalling the OS on their VPS, VDS or Dedicated Server.

Reminder: Back up your data before reinstalling your OS; no automatic backups are performed.

2 Downloading the Container Image

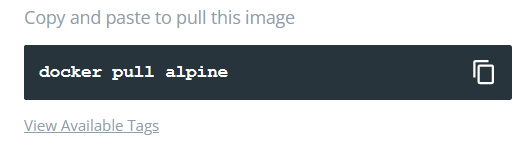

There are public and private Docker repositories where developers can browse and download images.

The most popular public repository is Docker Hub. Each image page on Docker Hub shows the ready-to-copy `docker pull` command that downloads the image to your computer.

Docker Hub aside, many companies (including us) run private repositories for their own internal images.
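When you pull an image by a short name such as alpine, Docker expands it into a full reference: the default registry is docker.io, official images live in the library namespace, and a missing tag means latest. An image in a private repository is addressed by putting the registry host first. The Python sketch below mimics roughly that normalization rule in simplified form; it ignores digests (@sha256:...), and the host registry.example.com is a placeholder.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Expand an image name the way `docker pull` interprets it (simplified, no digests)."""
    registry = "docker.io"
    first, _, rest = ref.partition("/")
    # The first component is a registry host if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, ref = first, rest
    name, tag = ref, "latest"
    # A colon after the last slash separates the tag from the name.
    last_slash, colon = ref.rfind("/"), ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name  # official Docker Hub images
    return ImageRef(registry, name, tag)


for ref in ["alpine", "ubuntu:22.04", "registry.example.com:5000/team/app:1.0"]:
    print(ref, "->", parse_image_ref(ref))
```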

3 Working with Containers

Once the developer has downloaded the image and started a container from it, they can copy data into the container or change various parameters. Docker’s official getting-started guide walks through these steps: https://docs.docker.com/get-started/
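These steps map onto two standard Docker commands: `docker run` starts a container from an image (`-d` runs it in the background, `-p` publishes a port, `-v` mounts a host directory, `-e` sets an environment variable), and `docker cp` copies files from the host into a container. The Python sketch below only assembles these commands as argument lists; the container name web, the nginx:alpine image and the file paths are illustrative assumptions.

```python
import shlex
from typing import Dict, List, Optional


def run_command(image: str, name: str,
                ports: Optional[Dict[int, int]] = None,
                volumes: Optional[Dict[str, str]] = None,
                env: Optional[Dict[str, str]] = None) -> List[str]:
    """Build a `docker run` command that starts a named container in the background."""
    cmd = ["docker", "run", "-d", "--name", name]
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]  # publish a container port on the host
    for host_path, container_path in (volumes or {}).items():
        cmd += ["-v", f"{host_path}:{container_path}"]  # share a host directory with the container
    for key, value in (env or {}).items():
        cmd += ["-e", f"{key}={value}"]                 # set an environment variable
    cmd.append(image)
    return cmd


def copy_into(container: str, src: str, dest: str) -> List[str]:
    """Build a `docker cp` command that copies a host file or directory into a container."""
    return ["docker", "cp", src, f"{container}:{dest}"]


print(shlex.join(run_command("nginx:alpine", "web", ports={8080: 80}, env={"TZ": "UTC"})))
print(shlex.join(copy_into("web", "./index.html", "/usr/share/nginx/html/index.html")))
```

Building argument lists rather than shell strings means each list can be passed straight to `subprocess.run()` without worrying about shell quoting.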

4 Uploading the Work into a Repository

Once the work is done, the developer pushes the image to the respective repository. Then they can simply send a link to their colleagues or publish the image for everyone to use.
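With Docker’s own tooling, this step usually means saving the container as an image (`docker commit`), giving it a name that points at the target registry (`docker tag`), signing in (`docker login`) and uploading it (`docker push`). A minimal sketch that builds these commands; the repository alice/my-web, the tag 1.0 and the host registry.example.com are hypothetical.

```python
from typing import List, Optional


def publish_commands(container: str, repository: str, tag: str,
                     registry: Optional[str] = None) -> List[List[str]]:
    """Build the commands that save a container as an image and push it to a registry."""
    local = f"{repository}:{tag}"
    target = f"{registry}/{local}" if registry else local
    commands = [["docker", "commit", container, local]]    # snapshot the container as an image
    if target != local:
        commands.append(["docker", "tag", local, target])  # add a name pointing at the private registry
    # Without a server argument, docker login signs in to Docker Hub.
    commands.append(["docker", "login", registry] if registry else ["docker", "login"])
    commands.append(["docker", "push", target])            # upload so colleagues can pull it
    return commands


for cmd in publish_commands("web", "alice/my-web", "1.0"):
    print(" ".join(cmd))
for cmd in publish_commands("web", "team/my-web", "1.0", registry="registry.example.com"):
    print(" ".join(cmd))
```

`docker commit` fits the interactive workflow described here, but for repeatable results teams usually describe the image in a Dockerfile and build it with `docker build` instead.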